Home > On-Demand Archives > Talks >

Things my mother told me about The Sampled data Fourier Transform

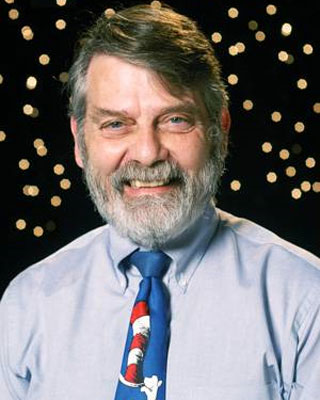

fred harris - Watch Now - DSP Online Conference 2025 - Duration: 01:33:44

In one convenient description, an N‑dimensional vector describes a point in N‑space as a weighted summation of equally spaced samples. We call that description the time‑domain representation. The Discrete Fourier Transform (DFT) is a transform that describes that same point in N‑space as a weighted summation of cosines and sines. We call that description the frequency‑domain representation. The point is the same in both coordinate systems, but the basis sequence that describes the point is different.

Think of a point in three‑dimensional space described in Cartesian coordinates (x, y, z). The same point can also be described in polar coordinates (R, θ, φ). Both 3‑tuples describe the same point, but in different coordinates. We select the coordinate system to describe that point in either form and can change forms for convenience of a different perspective. The inverse DFT (IDFT) reverses the mapping, converting the frequency‑domain description to the time‑domain description. The DFT is a transform with well defined and useful properties.

The DFT is implemented as a set of length-N inner products of the input vector on a set of N orthogonal basis vectors. Each inner product requires N complex multiplies and adds, which means the set of N inner products requires N2 complex multiplies and adds. If N is small, say 102, then 102 complex products is manageable. If N is large, say 103, then 106 complex products may give us cause for second thoughts.

The Fast Fourier Transform (FFT) is an algorithm that performs the DFT operations with, on the order of, mN complex multiplies and adds. In one form of the algorithm the scale factor m is log₂(N)/2 and in another form m is 2. The most common constraint we learn when we first study the FFT algorithm is that N must be highly composite, the product of many factors. Misconception number 1. The most well‑known version of the FFT is the Cooley–Tukey radix‑2 algorithm. This algorithm is so pervasive that we are often misguided to believe that N must be a power of 2, i.e., 2³. Misconception number 2. FFTs come in all sizes, including prime lengths.

We will review the FFT in three primary forms — the Cooley–Tukey, the Good–Thomas, and the Winograd — with a few essential equations and with many figures and images to show why and how they differ.

This guide was created with the help of AI, based on the presentation's transcript. Its goal is to give you useful context and background so you can get the most out of the session.

What this presentation is about and why it matters

Fred Harris' talk surveys the family of algorithms that compute the Discrete Fourier Transform (DFT) efficiently. He explains why the naive DFT is expensive, what the Fast Fourier Transform (FFT) really is, and how several alternative algorithmic families (Cooley–Tukey, Good–Thomas/prime-factor, Rader, Bluestein and Winograd) attack the same mathematical problem in different ways. Beyond algorithm names, Harris emphasizes practical engineering trade-offs: multiplicative complexity (how many multiplies you must do), table sizes (how many trig values you need), numerical precision issues, and when alternative factorizations are preferable in hardware or constrained environments.

Why it matters in practice

Fourier transforms are used everywhere in signal processing, communications (OFDM), image and audio compression (JPEG/MPEG/MP3), radar and remote sensing (SAR), and many embedded real‑time systems. Choosing an FFT algorithm affects run time, memory, numeric stability, and implementability on FPGAs, GPUs, or space‑qualified hardware. This presentation links theory to engineering examples (e.g., large 160k and 33M transforms), so you get intuition about which algorithmic family to pick for a given constraint.

Who will benefit the most from this presentation

- DSP engineers implementing transforms on hardware (FPGAs, DSPs, space‑qualified computers).

- Graduate students and advanced undergraduates studying FFT algorithms and algorithmic complexity.

- Developers optimizing signal‑processing code (C, MATLAB, GPU) who need to choose transform sizes and factorizations.

- Researchers curious about prime‑length and minimal‑multiplication transform techniques.

What you need to know

To get the most from the talk, make sure you are comfortable with these basic ideas:

- Definition of the DFT: $X[k]=\sum_{n=0}^{N-1} x[n]\,e^{-j\,2\pi kn/N}$. The inverse is the conjugate form with a 1/N scale.

- Complexity classes: the direct DFT costs $O(N^2)$ complex operations; common FFTs reduce that (e.g., radix‑2 Cooley–Tukey gives $O(N\log N)$ behavior in practice).

- Decimation concepts: decimation‑in‑time and decimation‑in‑frequency are dual factorizations that lead to butterfly operations and twiddle multipliers.

- Zero‑stuffing (upsampling) and zero‑padding: inserting zeros changes the sample spacing and spectral copy spacing — a key idea for interpolation and for converting transforms into convolutions.

- Chinese Remainder / prime‑factor mappings: when N factors into relatively prime parts, Good–Thomas (prime‑factor) methods reorder indices ("bishop's/knight's tour") to avoid twiddle tables and reuse small table‑free transforms.

- Rader and Bluestein: methods to handle prime N by converting the DFT into a convolution that can then be computed via an FFT (or other convolution method).

- Winograd: focuses on minimizing multiplicative complexity for short convolutions and small prime transforms; often achieves only about 2 multiplies per data sample in the short‑length case, at the expense of more additions and bookkeeping.

- Practical tradeoffs: table size, precision (floating point mantissa), memory layout (row/column, load/unload), and the cost of twiddle multiplications all determine the best choice for a given N and platform.

Glossary

- DFT — Discrete Fourier Transform: maps N time samples to N frequency samples using complex exponentials.

- DTFT — Discrete‑Time Fourier Transform: continuous (in frequency) transform of a discrete sequence; spectrum is periodic.

- FFT — Fast Fourier Transform: any algorithmic family that computes the DFT with significantly fewer operations than naive $O(N^2)$.

- Cooley–Tukey — The common radix‑based FFT family that factors N into smaller DFTs and uses twiddle factors and butterflies.

- Good–Thomas (Prime‑Factor) — A permutation/factorization method for relatively prime factors that avoids twiddle factors and uses small table‑free transforms.

- Rader transform — Converts a prime‑length DFT into an $(N-1)$‑point circular convolution using a reindexing based on generators modulo N.

- Bluestein (Chirp‑Z) — Another convolution‑based method that computes arbitrary length DFTs by embedding into a convolution and using a power‑of‑two FFT.

- Winograd — Algorithms that minimize the number of multiplications for small convolutions/DFTs at the cost of more additions and index transforms.

- Twiddle factor — Complex phase multipliers (powers of $e^{-j2\pi/N}$) applied between subtransform stages to correct phase offsets.

- Zero‑stuffing / Zero‑padding — Inserting zeros between or after samples to change sampling rate or to prepare sequences for convolution/FFT methods.

A few nice words about the presentation

Fred Harris combines deep historical perspective, down‑to‑earth engineering anecdotes, and clear diagrammatic intuition. He doesn’t just list algorithms; he illustrates when and why each family matters with concrete examples (large transforms on constrained hardware, table‑size failures, and practical factor choices). If you want intuition that connects mathematical structure to implementation tradeoffs — and you appreciate stories that make algorithms memorable — this talk will repay your attention.

What a lecture! Amazing!

I am going to trick my son now in chess. I’ll play over GF(2^3) and he’ll play as usual. We’ll see who wins with less operations. Kge8++