
Resampling Filters: Interpolators and Interpolation

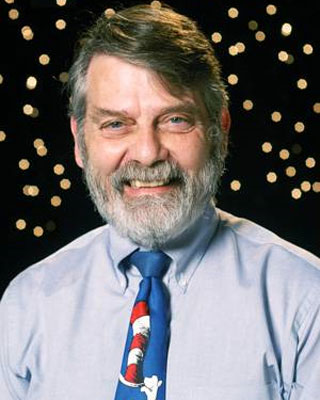

Fredric J Harris - DSP Online Conference 2023 - Duration: 02:06:06

The first time I had to design an interpolator to change the sample rate of an existing time series was in the early 1960s. A group of engineers was determining the acoustic signature of a ship in San Diego Harbor. Two small vessels circled the ship and collected samples of the ship’s sounds to be cross-correlated off-line in a mainframe computer. Imagine our surprise when we realized that the two collection platforms had operated at different sample rates to collect their versions of the sampled data signal: 10 kHz and 12 kHz! You can’t correlate time sequences that have different sample rates! It was an interesting learning process.
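To make the 10 kHz versus 12 kHz mismatch concrete: the two rates are related by the ratio 6/5, so the slower record can be brought to the faster rate by evaluating a lowpass interpolation kernel at output instants spaced 5/6 of an input sample apart. The following is only a minimal sketch under stated assumptions, not the method used in the 1960s and not taken from the talk's scripts. The function name `resample_rational`, the Hann-windowed sinc kernel, and the `half_width` parameter are choices made for this illustration.

```python
import math
from fractions import Fraction

def sinc(t):
    """Normalized sinc, sin(pi t) / (pi t), with the t = 0 limit handled."""
    return 1.0 if t == 0.0 else math.sin(math.pi * t) / (math.pi * t)

def resample_rational(x, fs_in, fs_out, half_width=8):
    """Resample x from fs_in to fs_out (integer rates in Hz).

    Assumed design: a Hann-windowed sinc kernel whose half-length is
    half_width input samples when interpolating to a higher rate.
    """
    ratio = Fraction(fs_out, fs_in)            # 12000/10000 reduces to 6/5
    up, down = ratio.numerator, ratio.denominator
    scale = min(1.0, up / down)                # narrows the cutoff when decimating
    span = half_width / scale                  # kernel half-length in input samples
    n_out = (len(x) * up) // down              # output instants stay inside the record
    y = []
    for m in range(n_out):
        t = m * down / up                      # output instant in input-sample units
        lo = max(0, math.ceil(t - span))
        hi = min(len(x) - 1, math.floor(t + span))
        acc = 0.0
        for n in range(lo, hi + 1):
            d = t - n
            window = 0.5 * (1.0 + math.cos(math.pi * d / span))  # Hann taper, zero at |d| = span
            acc += x[n] * scale * sinc(scale * d) * window
        y.append(acc)
    return y, up, down

# A 1 kHz tone captured at 10 kHz, brought to 12 kHz
x = [math.sin(2 * math.pi * 1000 * n / 10000) for n in range(200)]
y, up, down = resample_rational(x, 10000, 12000)
```

Away from the ends of the record, `y[m]` should closely track the same tone sampled directly at 12 kHz, after which the two records share a common time grid and can be correlated. A production design would precompute the kernel as a polyphase filter bank rather than evaluating the sinc for every output sample.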

My Webster’s Second Collegiate Dictionary lists, in its third entry, a math definition of interpolate as: “To estimate a missing functional value by taking a weighted average of known functional values at neighboring points.” Not bad, and that certainly describes the processing performed by a multirate filter. Interpolation is an old skill that many of us learned before calculators and keystrokes replaced tables of transcendental functions such as log(x) and the various trigonometric functions. Take for example the NBS Applied Mathematics Series, AMS-55 Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables by Abramowitz and Stegun. This publication contains numerous tables listing values of different functions, sin(θ) for example, for values of θ equal to …, 40.0, 40.1, 40.2, … and so on. Interpolation is required to determine the value of sin(θ) for the values of θ between the listed values. Interpolation was such an important tool in numerical analysis that three pages in the introduction of the handbook are devoted to the interpolation process. Interpolation continues to be an important tool in signal processing and we now present and discuss the DSP filtering description of the interpolation process.
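The dictionary definition can be tried directly on a table lookup. The sketch below assumes the listed values of θ are in degrees and uses the simplest weighted average, linear interpolation between the two nearest entries. The helper name `interpolate_linear` and the query point 40.13 are made up for this illustration and are not taken from the handbook.

```python
import math

def interpolate_linear(theta, theta0, theta1, f0, f1):
    """Weighted average of the two table entries that bracket theta."""
    w = (theta - theta0) / (theta1 - theta0)   # fractional position between the entries
    return (1.0 - w) * f0 + w * f1

# Two neighboring table entries (theta in degrees, an assumption)
theta0, theta1 = 40.1, 40.2
f0 = math.sin(math.radians(theta0))
f1 = math.sin(math.radians(theta1))

# Estimate the missing value at a point between the listed entries
estimate = interpolate_linear(40.13, theta0, theta1, f0, f1)
exact = math.sin(math.radians(40.13))
print(estimate, exact)
```

The two weights, `1 - w` and `w`, play the same role as the coefficients of a two-tap filter, which is the link to the multirate filter description. Higher-order interpolation uses more neighbors and more weights.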

The ability to change the sample rate of a sequence to another selected sample rate has become the core enabler of software defined radios and of sampled data communication systems. Synchronizing remote clocks on moving platforms and adjusting clocks to remove offsets due to environmental effects, manufacturing tolerances, and Doppler-induced frequency shifts are but a few of the many things we accomplish with arbitrary interpolators. Let’s have a cheer, Hear, Hear, for interpolators!

Slides and Matlab scripts provided by fred can now be downloaded from the left-hand side column (at the bottom)

Insightful and thought-provoking as always. Such a valuable DSP treasure! Almost a Super Man. Many thanks.

Thanks John, I'm glad to hear you enjoyed it.

Stephan reminded me that I have to keep sharing the fun I have doing what we do!

fred

When you are interpolating error values at near resampled data points, is dither not also an appropriate technique instead of/with left-neighbour interpolation? Or is it too computationally expensive to consider most of the time?

PhilM,

Good question,

A dither will not do the same degree of artifact suppression as the linear interpolation using the slope between adjacent interpolated samples. The dither will smear the spectral artifacts to whiten their contribution but the derivative correction will squish down their spectral contributions. The dither breaks up periodic errors replacing them with random errors. At the moment I can't see periodic errors in the interpolator. I'll give it some more thought!

Hi Fred,

Fantastic presentation as usual! Really enjoyed it and learned a lot.

Not a polyphase filter question, but a Farrow filter question for you...

If I want to use a Farrow structure to interpolate over a wide range of rates (e.g. 1-1024 with 10-bit fractional precision), what are the criteria for the prototype filter that I use for the polynomial-approximation-based sections? That is, what are its bandwidth and transition band requirements?

The prototype filter must change for different rate ranges or stopband requirements, right?

I'm trying to understand the design methodology behind the prototype filter design.

Thank you very much,

Sean